Dec 4, 2025

Introducing Ultravox v0.7, the world’s smartest speech understanding model

Zach Koch

Today we’re excited to release the newest version of the Ultravox speech model, Ultravox v0.7. Trained for fast-paced, real-world conversation, this is the smartest, most capable speech model that we’ve ever built. It’s the leading speech model available today, capable of understanding real-world speech (background noise and all!) without the need for a separate transcription process.

Since releasing and sharing the first version of the Ultravox model over one year ago, thousands of businesses from around the world have built and scaled real-time voice AI agents on top of Ultravox. Whether it’s for customer service, lead qualification, or just long-form conversation, we heard the same thing from everyone: you loved the speed and conversational experience of Ultravox, but you needed better instruction following and more reliable tool calling.

We’re proud to share that Ultravox v0.7 delivers on both of those without sacrificing the speed, performance, and conversational experience that users love (in fact, we improved inference performance by about 20% when running on our dedicated infrastructure).

One of the most important changes in v0.7 is our move to a new LLM backbone. We ran a comprehensive evaluation of the best open-weight models and chose GLM 4.6 as our new backbone. With 355B parameters and 160 experts per layer, it substantially outperforms Llama 3.3 70B on both instruction following and tool calling. As always, the model weights are available on Hugging Face.

We’re also keeping the cost of using v0.7 on Ultravox Realtime (our platform for building and scaling voice AI agents) the same at $0.05/min. That’s state-of-the-art model performance with speech understanding and speech generation (including ElevenLabs and Cartesia voices) at half the price of most providers.

You can get started with v0.7 today either through the API or through the web interface.

Frontier Speech Understanding

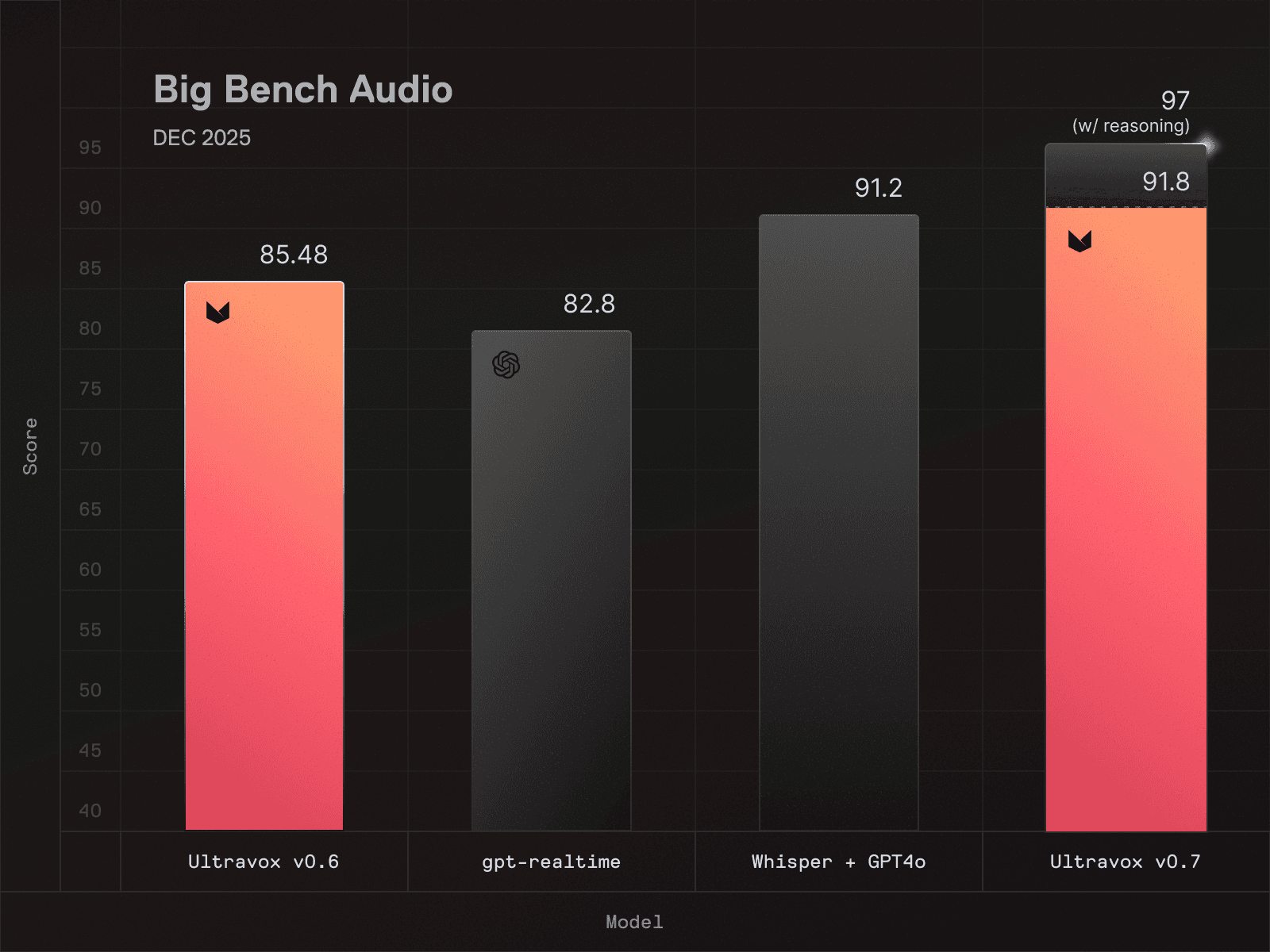

Ultravox v0.7 is state-of-the-art on Big Bench Audio, scoring 91.8% without reasoning and an industry-leading 97% with reasoning enabled.

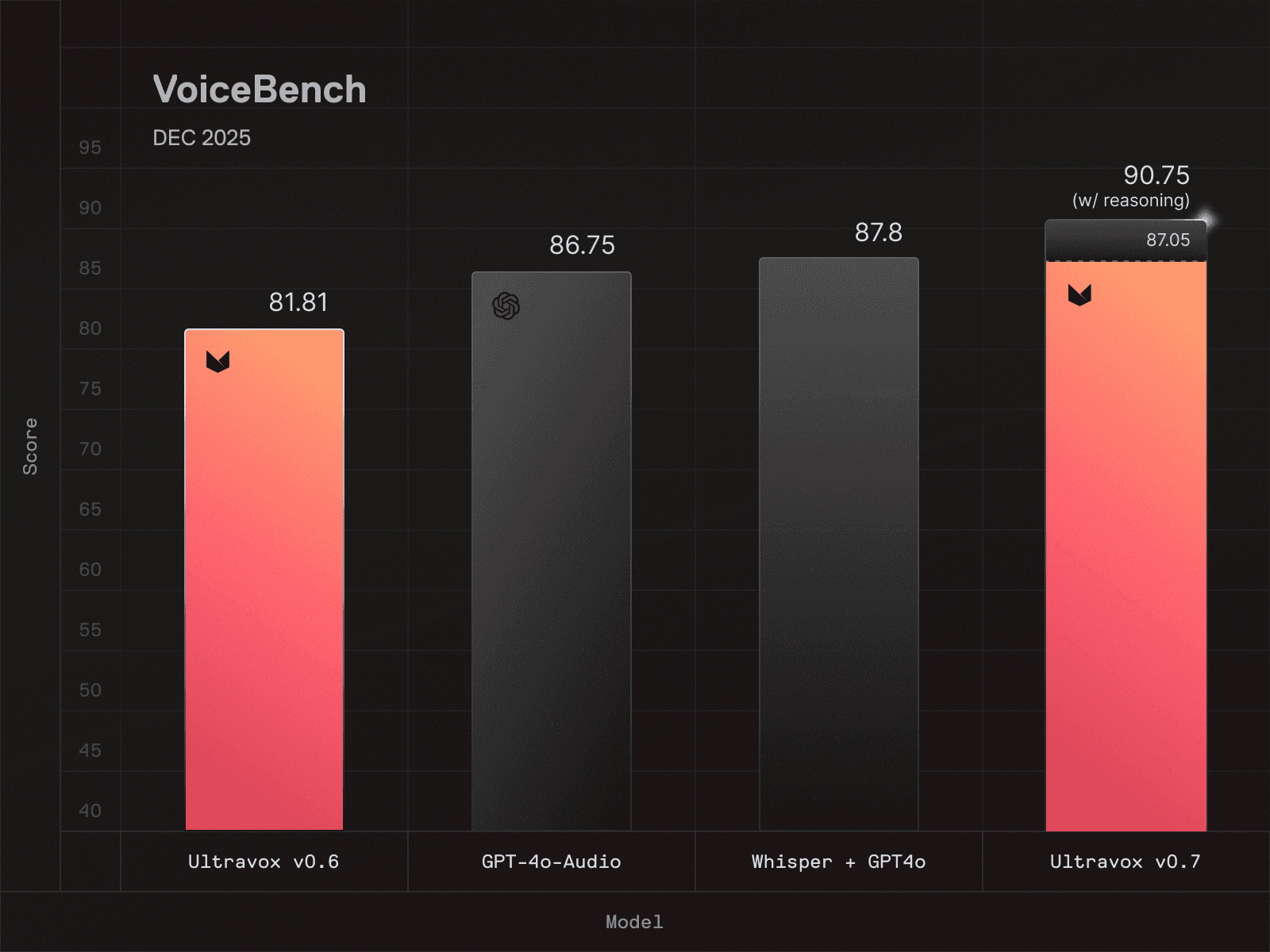

On VoiceBench, v0.7 (without reasoning) ranks first among all speech models. With reasoning enabled, it extends that lead, outperforming both end-to-end models and ASR+LLM component stacks.

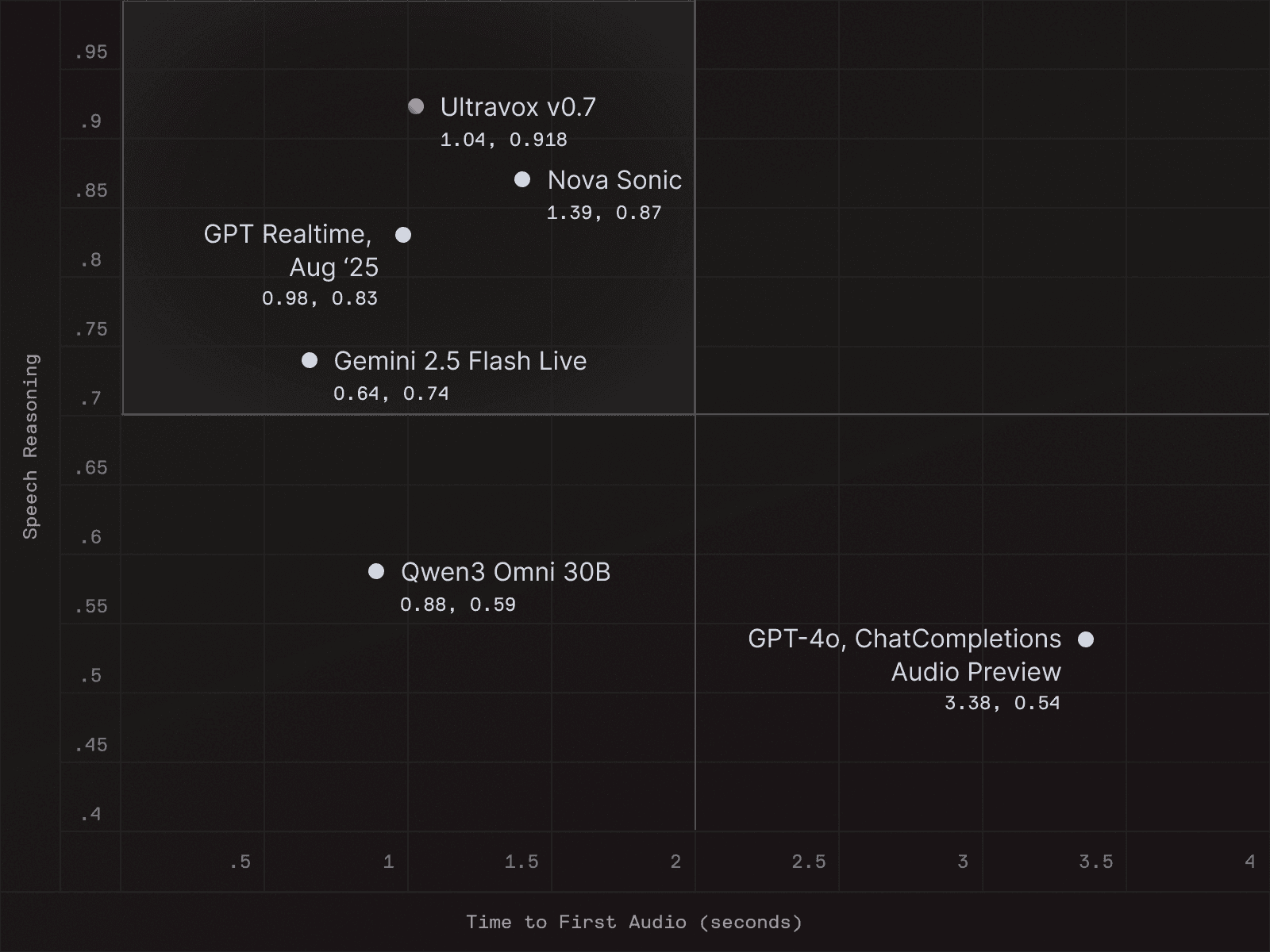

When measured for speed, the Ultravox model running on our dedicated infrastructure stack for Ultravox Realtime achieves speech-to-speech performance that is on par with or better than equivalent systems.

Numbers taken from Artificial Analysis' Speech-to-Speech "Speech reasoning vs. Speed" [source]

Ultravox Realtime Platform Changes

Ultravox v0.7 will become the new default model on Ultravox Realtime starting December 22, but you can start using it today by selecting ultravox-v0.7 in the web UI or by setting the model parameter explicitly when creating a call via the API.
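As a rough illustration of setting the model explicitly, here is a minimal Python sketch that builds a call-creation request with the model pinned to ultravox-v0.7. The endpoint URL, the X-API-Key header, and the systemPrompt field name are assumptions for illustration and should be checked against the Ultravox API reference; only the model string comes from this post. The helper name build_call_request is hypothetical.

```python
import json
import urllib.request

# Assumed endpoint; confirm against the Ultravox API reference before use.
CALLS_URL = "https://api.ultravox.ai/api/calls"


def build_call_request(api_key: str, system_prompt: str,
                       model: str = "ultravox-v0.7") -> urllib.request.Request:
    """Build (but do not send) a request that creates a call on a pinned model."""
    body = {
        "systemPrompt": system_prompt,  # assumed field name
        "model": model,                 # explicit model string from this post
    }
    return urllib.request.Request(
        CALLS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-API-Key": api_key,       # assumed auth header name
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_call_request("YOUR_API_KEY", "You are a helpful voice agent.")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually create the call, pass the request to urllib.request.urlopen(req).
```

Setting the model string on every request, rather than relying on the default, keeps an agent's behavior from changing when the platform default moves.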

This default model update will affect all agents using fixie-ai/ultravox as well as agents with unspecified model strings. If you plan to migrate to this model version, we recommend starting testing as soon as possible, as you may need to adjust your prompts to get the best possible experience with your agent after the model transition.

While we think Ultravox v0.7 is the best-in-class option, we’ll continue to make alternate models available for users who need them. So if you’re happy with your agent’s performance using Ultravox v0.6 (powered by Llama 3.3 70B) and don’t plan to change, or if you just need more time for testing before migrating to Ultravox v0.7, you’ll be able to decide when (or if) your agent starts running on the latest model.

You can keep using the Llama version of Ultravox (ultravox-v0.6), but you’ll need to set the model string manually to do so. If your agent is already configured to use fixie-ai/ultravox-llama3.3-70b or ultravox-v0.6, then no changes are required: your agent will continue to use the legacy model after December 22.

We’ll also be deprecating support for Qwen3 due to a combination of low usage and sub-optimal performance (GLM 4.6 outperforms Qwen3 across all our tested benchmarks). Users will need to migrate calls and/or agents to another model by December 21 in order to avoid an interruption in service. Gemma3 will continue to operate as it does currently.
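To check which of your agents are affected, the rules above can be sketched as a small Python helper. The exact-match model strings are the ones named in this post; the substring checks for Qwen3 and Gemma3 are an assumption, since the post does not give those model strings, and the function name and return labels are hypothetical.

```python
from typing import Optional

# Model strings named in this post.
FOLLOWS_DEFAULT = {"fixie-ai/ultravox"}
PINNED_LEGACY = {"fixie-ai/ultravox-llama3.3-70b", "ultravox-v0.6"}


def migration_status(model: Optional[str]) -> str:
    """Classify an agent's model string by what happens around December 22."""
    if not model or model in FOLLOWS_DEFAULT:
        # Unspecified or default model strings move to the new default.
        return "switches-to-v0.7"
    if model == "ultravox-v0.7":
        return "already-on-v0.7"
    if model in PINNED_LEGACY:
        return "stays-on-v0.6"
    name = model.lower()
    if "qwen3" in name:   # assumption: Qwen3 model strings contain "qwen3"
        return "must-migrate-by-dec-21"
    if "gemma3" in name:  # assumption: Gemma3 model strings contain "gemma3"
        return "unchanged"
    return "unknown"


if __name__ == "__main__":
    for m in [None, "fixie-ai/ultravox", "ultravox-v0.6", "ultravox-v0.7"]:
        print(repr(m), "->", migration_status(m))
```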